Move to Right [ns3-gym-multiagent, reinforcement learning

(Q-Learning) example]

[Description]

Based on ns3-gym-multiagent

and my previous

move to right example, I wrote this lab. An agent is located at position 0 (state=0) that is trying

to move to position 5 (state=5). The agent can move to left (action=0) or move

to right (action=1). When the agent reaches the position 5 (state=5), the agent

can get the reward 1.0. Otherwise, the agent gets nothing (reward=0.0). I will

show how to use q-learning to make the agent move to right as fast as possible.

In this lab, there are two agents that are trying to move to position 5. Only

when two agents both reach position 5, the work is done.

Note.

If you want to run this example, you need to install ns3-gym-multiagent first.

Then create a directory named move2right-multiagent under scratch. Put the

following codes in this directory.

[mygym.h]

|

/* -*- Mode: C++; c-file-style:

"gnu"; indent-tabs-mode:nil; -*- */ /* * Copyright (c) 2019 * * This program is free software; you

can redistribute it and/or modify * it under the terms of the GNU General

Public License version 2 as * published by the Free Software

Foundation; * * This program is distributed in the

hope that it will be useful, * but WITHOUT ANY WARRANTY; without

even the implied warranty of * MERCHANTABILITY or FITNESS FOR A

PARTICULAR PURPOSE. See the * GNU General Public License for more

details. * * You should have received a copy of

the GNU General Public License * along with this program; if not,

write to the Free Software * Foundation, Inc., 59 Temple Place,

Suite 330, Boston, MA 02111-1307 USA * * Author: ZhangminWang */ #ifndef

MY_GYM_ENTITY_H #define MY_GYM_ENTITY_H #include "ns3/opengym-module.h" #include "ns3/nstime.h" namespace ns3 { /** * \note This is only a test, not use

algorithm */ class MyGymEnv : public OpenGymMultiEnv { public:

MyGymEnv ();

MyGymEnv (Time stepTime);

virtual ~MyGymEnv ();

static TypeId GetTypeId

(void);

virtual void DoDispose ();

Ptr<OpenGymSpace>

GetActionSpace(uint32_t id);

Ptr<OpenGymSpace>

GetObservationSpace(uint32_t id);

bool GetDone(uint32_t id);

Ptr<OpenGymDataContainer>

GetObservation(uint32_t id);

float GetReward(uint32_t id);

std::string GetInfo(uint32_t

id);

bool ExecuteActions(uint32_t id, Ptr<OpenGymDataContainer> action); private:

void ScheduleNextStateRead();

Time m_interval; }; } #endif // MY_GYM_ENTITY_H |

[mygym.cc]

|

/* -*- Mode: C++; c-file-style:

"gnu"; indent-tabs-mode:nil; -*- */ /* * Copyright (c) 2018 * * This program is free software; you

can redistribute it and/or modify * it under the terms of the GNU General

Public License version 2 as * published by the Free Software

Foundation; * * This program is distributed in the

hope that it will be useful, * but WITHOUT ANY WARRANTY; without

even the implied warranty of * MERCHANTABILITY or FITNESS FOR A

PARTICULAR PURPOSE. See the * GNU General Public License for more

details. * * You should have received a copy of

the GNU General Public License * along with this program; if not,

write to the Free Software * Foundation, Inc., 59 Temple Place,

Suite 330, Boston, MA 02111-1307 USA * * Author: ZhangminWang */ #include "mygym.h" #include "ns3/object.h" #include "ns3/core-module.h" #include "ns3/wifi-module.h" #include "ns3/node-list.h" #include "ns3/log.h" #include <sstream> #include <iostream> const int n=2; int mypos[n+1]; int myact[n+1]; namespace ns3 { NS_LOG_COMPONENT_DEFINE ("MyGymEnv"); NS_OBJECT_ENSURE_REGISTERED (MyGymEnv); MyGymEnv::MyGymEnv () {

NS_LOG_FUNCTION (this);

m_interval = Seconds (0.1);

Simulator::Schedule (Seconds (0.0), &MyGymEnv::ScheduleNextStateRead,

this); } MyGymEnv::MyGymEnv (Time stepTime) {

NS_LOG_FUNCTION (this);

m_interval = stepTime;

Simulator::Schedule (Seconds (0.0), &MyGymEnv::ScheduleNextStateRead,

this); } void MyGymEnv::ScheduleNextStateRead () {

NS_LOG_FUNCTION (this);

Simulator::Schedule (m_interval,

&MyGymEnv::ScheduleNextStateRead,

this);

// Notify();

Step (); } MyGymEnv::~MyGymEnv () {

NS_LOG_FUNCTION (this); } TypeId MyGymEnv::GetTypeId (void) {

static TypeId tid

= TypeId ("MyGymEnv")

.SetParent<OpenGymEnv> ()

.SetGroupName

("OpenGym")

.AddConstructor<MyGymEnv> ();

return tid; } void MyGymEnv::DoDispose () {

NS_LOG_FUNCTION (this); } /* Define observation space */ Ptr<OpenGymSpace> MyGymEnv::GetObservationSpace (uint32_t id) {

// uint32_t nodeNum = 5;

uint32_t low = 0;

uint32_t high = 5;

std::vector<uint32_t> shape = {1};

std::string dtype =

TypeNameGet<uint32_t> ();

Ptr<OpenGymBoxSpace>

box = CreateObject<OpenGymBoxSpace>

(low, high, shape, dtype);

NS_LOG_UNCOND ("ID " << id << " MyGetObservationSpace: " << box);

return box; } /* Define action space */ Ptr<OpenGymSpace> MyGymEnv::GetActionSpace (uint32_t id) {

Ptr<OpenGymDiscreteSpace>

discrete = CreateObject<OpenGymDiscreteSpace>

(2);

NS_LOG_UNCOND ("ID " << id << " MyGetActionSpace: " << discrete);

return discrete; } /* Define game over condition */ bool MyGymEnv::GetDone (uint32_t id) {

bool isGameOver = false;

static int stepCounter[n+1] = {0};

stepCounter[id] += 1;

if (mypos[id]==5) { isGameOver = true;

}

NS_LOG_UNCOND ("ID:"<< id << " MyGetGameOver: " << isGameOver

<< " stepCounter:"<< stepCounter[id]);

return isGameOver; } /* Collect observations */ Ptr<OpenGymDataContainer> MyGymEnv::GetObservation (uint32_t id) {

std::vector<uint32_t> shape =

{1,};

Ptr<OpenGymBoxContainer<uint32_t>>

box = CreateObject<OpenGymBoxContainer<uint32_t>>

(shape);

box->AddValue (mypos[id]);

Ptr<OpenGymTupleContainer>

data = CreateObject<OpenGymTupleContainer>

();

data->Add (box);

// Print data from tuple

Ptr<OpenGymBoxContainer<uint32_t>>

mbox = DynamicCast<OpenGymBoxContainer<uint32_t>>

(data->Get (0));

NS_LOG_UNCOND ("Now:" << Simulator::Now

().GetSeconds ());

NS_LOG_UNCOND ("ID " << id << " MyGetObservation: " << data);

//NS_LOG_UNCOND ("---" << mbox);

return data; } /* Define reward function */ float MyGymEnv::GetReward (uint32_t id) {

float reward = 0.0;

if(mypos[id]==5) reward=1.0;

NS_LOG_UNCOND ("ID " << id << " MyGetReward: " << reward);

return reward; } /* Define extra info. Optional */ std::string MyGymEnv::GetInfo (uint32_t id) {

std::string myInfo

= "testInfo";

NS_LOG_UNCOND ("ID " << id << " MyGetExtraInfo: " << myInfo);

return myInfo; } /* Execute received actions */ bool MyGymEnv::ExecuteActions (uint32_t id, Ptr<OpenGymDataContainer>

action) {

Ptr<OpenGymDiscreteContainer>

discrete = DynamicCast<OpenGymDiscreteContainer>(action);

myact[id]=discrete->GetValue();

if(myact[id]==1){ if(mypos[id]==5) mypos[id]=5; else mypos[id]+=1;

} else { if (mypos[id]==0) mypos[id]=0; else mypos[id]-=1;

}

NS_LOG_UNCOND ("ID " << id << " MyExecuteActions: " << myact);

return true; } } // namespace ns3 |

[sim.cc]

|

/* -*- Mode: C++; c-file-style:

"gnu"; indent-tabs-mode:nil; -*- */ /* * Copyright (c) 2018 Piotr Gawlowicz * * This program is free software; you

can redistribute it and/or modify * it under the terms of the GNU General

Public License version 2 as * published by the Free Software

Foundation; * * This program is distributed in the

hope that it will be useful, * but WITHOUT ANY WARRANTY; without

even the implied warranty of * MERCHANTABILITY or FITNESS FOR A

PARTICULAR PURPOSE. See the * GNU General Public License for more

details. * * You should have received a copy of

the GNU General Public License * along with this program; if not,

write to the Free Software * Foundation, Inc., 59 Temple Place,

Suite 330, Boston, MA 02111-1307 USA * * Author: Piotr Gawlowicz

<gawlowicz.p@gmail.com> * */ #include "ns3/core-module.h" #include "ns3/opengym-module.h" #include "mygym.h" using namespace ns3; NS_LOG_COMPONENT_DEFINE ("OpenGym"); int main (int argc,

char *argv[]) {

// Parameters of the scenario

uint32_t simSeed = 1;

double simulationTime = 10; //seconds

double envStepTime = 0.1; //seconds, ns3gym

env step time interval

uint32_t testArg = 0;

uint32_t openGymPort;

CommandLine cmd;

// required parameters for OpenGym interface

cmd.AddValue

("openGymPort", "Port number for OpenGym env. Default: 5555", openGymPort);

cmd.AddValue

("simSeed", "Seed for random

generator. Default: 1", simSeed);

// optional parameters

cmd.AddValue

("simTime", "Simulation time in

seconds. Default: 1s", simulationTime);

cmd.AddValue

("stepTime", "Gym Env step time in

seconds. Default: 0.1s", envStepTime);

cmd.AddValue

("testArg", "Extra simulation

argument. Default: 0", testArg);

cmd.Parse (argc, argv);

NS_LOG_UNCOND ("Ns3Env parameters:");

NS_LOG_UNCOND ("--simulationTime:

" << simulationTime);

NS_LOG_UNCOND ("--envStepTime: "

<< envStepTime);

NS_LOG_UNCOND ("--seed: " << simSeed);

NS_LOG_UNCOND ("--testArg: "

<< testArg);

RngSeedManager::SetSeed (1);

RngSeedManager::SetRun (simSeed);

// OpenGym MultiEnv

Ptr<MyGymEnv>

myGymEnv = CreateObject<MyGymEnv> (Seconds (envStepTime));

for (uint32_t id = 1; id <= 2; id++) { myGymEnv->AddAgentId (id); }

NS_LOG_UNCOND ("Simulation start");

Simulator::Stop (Seconds (simulationTime));

Simulator::Run ();

NS_LOG_UNCOND ("Simulation stop");

myGymEnv->NotifySimulationEnd

();

Simulator::Destroy (); } |

[test.py]

|

#!/usr/bin/env

python3 # -*- coding: utf-8 -*- import argparse from ns3gym import ns3_multiagent_env as

ns3env import numpy as

np import pandas as pd import time N_STATES = 6

# the length of the 1 dimensional world ACTIONS = ['left', 'right'] # available actions EPSILON = 0.9 # greedy police ALPHA = 0.1 # learning rate GAMMA = 0.9 # discount factor MAX_EPISODES = 10 # maximum episodes FRESH_TIME = 0.1 # fresh time for one move # NOTE: This is only a test, not use

algorithm parser = argparse.ArgumentParser(description='Start simulation

script on/off') parser.add_argument('--start',

type=int,

default=1,

help='Start ns-3 simulation script 0/1, Default: 1') parser.add_argument('--iterations',

type=int,

default=MAX_EPISODES,

help='Number of iterations') args = parser.parse_args() startSim = True iterationNum = int(args.iterations) port = 5555 simTime = 10 # seconds stepTime = 0.1

# seconds seed = 1 simArgs = {"--simTime": simTime,

"--stepTime": stepTime,

"--testArg": 123} debug = False env = ns3env.MultiEnv(port=port, stepTime=stepTime, startSim=startSim, simSeed=seed, simArgs=simArgs, debug=debug) # simpler: #env = ns3env.Ns3Env() #env.reset() ob_spaces = env.observation_space ac_spaces = env.action_space print("Observation space: ", ob_spaces,

" size: ", len(ob_spaces)) print("Action space: ", ac_spaces,

" size: ", len(ac_spaces)) stepIdx = 0 currIt = 0 agent_num = 2 def build_q_table(n_states,

actions): table = pd.DataFrame( np.zeros((n_states,

len(actions))), # q_table initial values

columns=actions, # actions's

name ) # print(table) # show table return table def choose_action(state, q_table): # This is how to choose an

action state_actions

= q_table.iloc[state, :] #print("q_table=", q_table) #print("state_actions=", state_actions) if (np.random.uniform() > EPSILON) or ((state_actions == 0).all()): # act non-greedy or state-action have

no value action_name = np.random.choice(ACTIONS) #print("action_name1=", action_name) else: # act greedy action_name = state_actions.idxmax() # replace argmax to idxmax as argmax means a different function in newer

version of pandas #print("action_name2=", action_name) return action_name q_table=[] for ag in range(agent_num):

q_table.append(build_q_table(N_STATES, ACTIONS))

print("q_table[",

ag, "]=", q_table[ag]) try: while True: print("Start iteration: ", currIt) obs = env.reset() print("obs=", obs) #print(" type(obs[0][0][0])=",

type(obs[0][0][0]), " obs[0][0][0]=",

obs[0][0][0]) stepIdx=0 while True: stepIdx += 1 print("Step: ", stepIdx)

actions = []

for ag in range(agent_num):

A = choose_action(obs[ag][0][0], q_table[ag])

if A == 'right':

act=1

else:

act=0

actions.append(act)

print("---actions: ", actions) next_obs, reward, done, _ = env.step(actions) print("---next_obs, reward,

done:", next_obs[-2:], reward[-2:], done[-2:]) q_predict=[] for

ag in range(agent_num):

if(actions[ag]==0):

q_predict.append(q_table[ag].loc[obs[ag][0][0],

"left"])

else:

q_predict.append(q_table[ag].loc[obs[ag][0][0],

"right"])

#print("next_obs[-2:][ag][0][0]=",

next_obs[-2:][ag][0][0])

#print("reward[-2:][ag]=",

reward[-2:][ag])

#print("q_table[ag].iloc[next_obs[-2:][ag][0][0],

:].max()=", q_table[ag].iloc[next_obs[-2:][ag][0][0], :].max())

if next_obs[-2:][ag][0][0]

!= 5:

q_target = reward[-2:][ag]

+ GAMMA * q_table[ag].iloc[next_obs[-2:][ag][0][0], :].max()

else:

q_target = reward[-2:][ag] # next state is

terminal

#print("q_target=",

q_target, "q_predict[ag]=",

q_predict[ag])

if(actions[ag]==0):

q_table[ag].loc[obs[ag][0][0], "left"] += ALPHA * (q_target - q_predict[ag])

#print("q_table[ag].loc[obs[ag][0][0], 'left']=", q_table[ag].loc[obs[ag][0][0], "left"])

else:

q_table[ag].loc[obs[ag][0][0], "right"] += ALPHA * (q_target - q_predict[ag])

#print("q_table[ag].loc[obs[ag][0][0], 'right']=", q_table[ag].loc[obs[ag][0][0], "right"]) obs = next_obs[-2:] # move to next state #print("111 obs=", obs, " obs[0][0][0]=",

obs[0][0][0])

ok=1

for ag in range(agent_num):

if(done[-2:][ag]==False):

ok=0

break

if(ok==1): print("total steps:", stepIdx)

for ag in range(agent_num):

print("q_table[",

ag, "]=", q_table[ag])

break time.sleep(5) currIt += 1 if currIt == iterationNum:

break

except KeyboardInterrupt: print("Ctrl-C

-> Exit") finally: env.close() print("Done") |

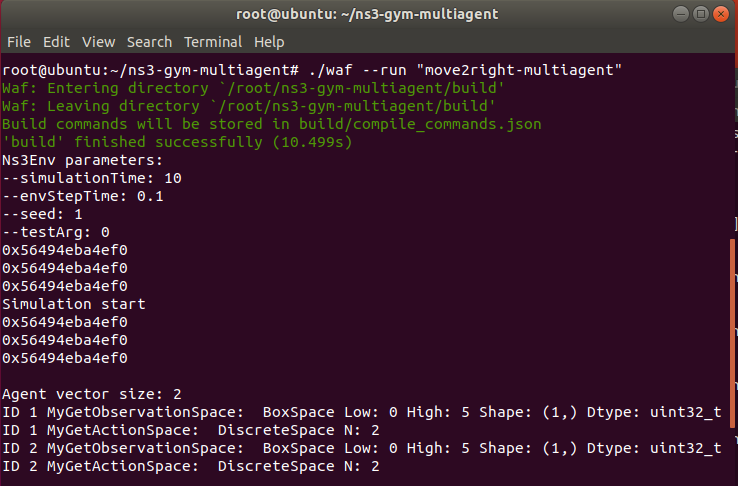

[Execution]

Open one terminal.

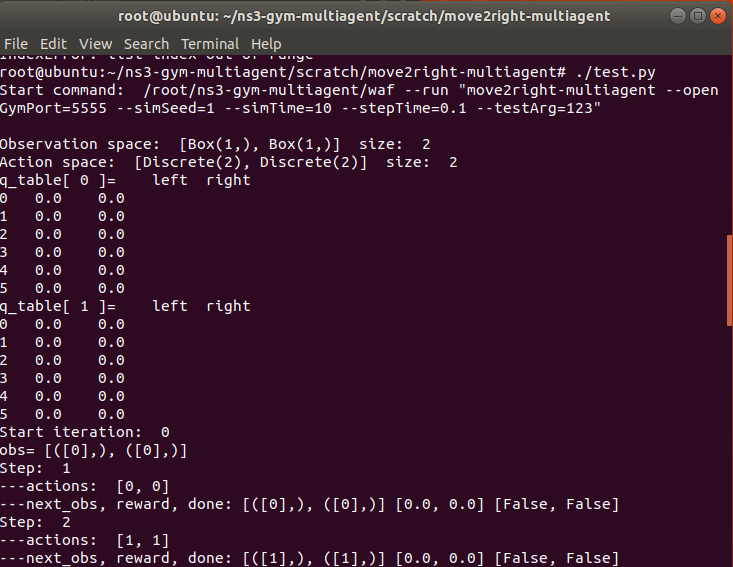

Open another terminal

…….

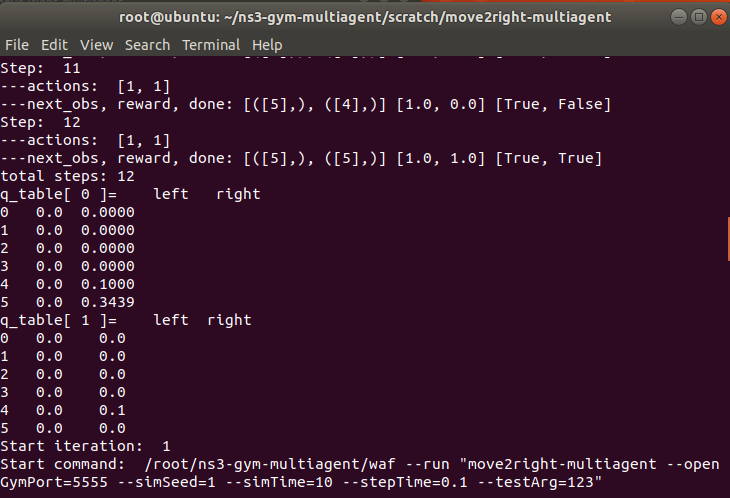

For the first run, these two agent needs 12 steps to finish the job.

…………………

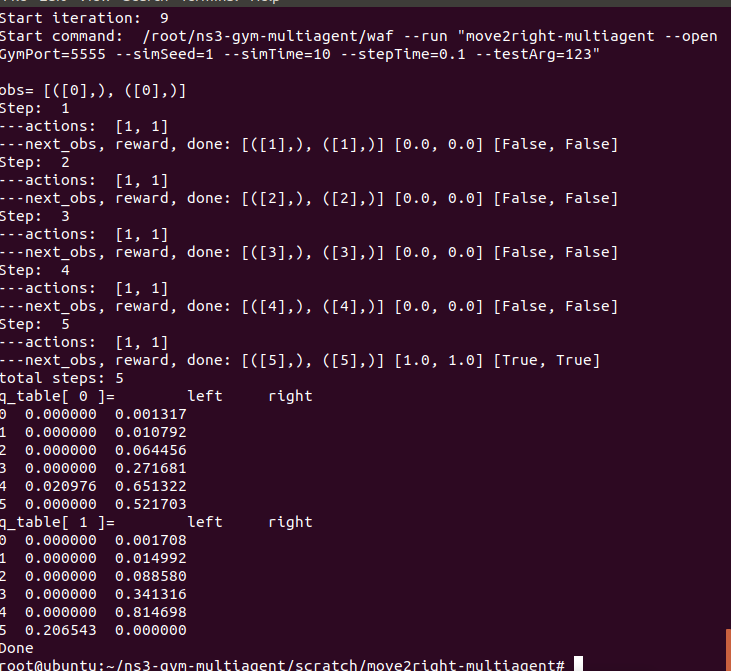

After few runs, two agents just need 5 steps to finish the job.

Last

Modified: 2022/11/15 done

[Author]

Dr. Chih-Heng Ke

Department of Computer

Science and Information Engineering, National Quemoy University, Kinmen,

Taiwan

Email: smallko@gmail.com