Contention window optimization using reinforcement learning (multi-agent version)

For more information, please refer to my papers:

C. H. Ke and L. Astuti, "Applying Deep Reinforcement Learning to Improve Throughput and Reduce Collision Rate in IEEE 802.11 Networks," KSII Transactions on Internet and Information Systems, vol. 16, no. 1, pp. 334-349, 2022. DOI: 10.3837/tiis.2022.01.019. (SCI) (code) (single-agent)

C. H. Ke and L. Astuti, "Applying Multi-Agent Deep Reinforcement Learning for Contention Window Optimization to Enhance Wireless Network Performance," ICT Express (SCI)

In this lab, I use PARL (the PaddlePaddle reinforcement learning framework) to optimize the contention window and thereby improve CSMA/CA performance.

P.S. Single-agent version: the agent is placed at the access point (AP). When the agent decides on a contention window size, the AP sends that size to the mobile stations in the beacon packet. When a mobile station receives the beacon, it sets its contention window accordingly.
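The beacon-based exchange in the single-agent version can be sketched as follows. This is a minimal illustration under stated assumptions, not the code from the single-agent paper. The `Beacon` and `Station` classes and `ap_broadcast()` are hypothetical. The action-to-CW mapping CW = 2^(5 + action), which gives 32 to 1024, is borrowed from Multiagent.py below.

```python
from dataclasses import dataclass
from typing import List

CW_MIN_EXP = 5   # 2**5 = 32 (assumed, as in Multiagent.py)
NUM_ACTIONS = 6  # actions 0..5 -> CW 32..1024


def action_to_cw(action: int) -> int:
    """Map a discrete agent action to a contention window size."""
    if not 0 <= action < NUM_ACTIONS:
        raise ValueError(f"action must be in [0, {NUM_ACTIONS - 1}]")
    return 2 ** (CW_MIN_EXP + action)


@dataclass
class Beacon:
    cw: int  # hypothetical field: CW size advertised by the AP


@dataclass
class Station:
    cw: int = 32

    def on_beacon(self, beacon: Beacon) -> None:
        # The station simply adopts the CW carried in the beacon
        self.cw = beacon.cw


def ap_broadcast(action: int, stations: List[Station]) -> Beacon:
    """AP side: turn the agent's action into a CW and 'send' it to all stations."""
    beacon = Beacon(cw=action_to_cw(action))
    for sta in stations:
        sta.on_beacon(beacon)
    return beacon


stations = [Station() for _ in range(3)]
ap_broadcast(2, stations)  # every station now uses CW = 128
```

Because every station adopts the same advertised value, a single agent effectively controls one shared CW for the whole cell.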

Multi-agent version: an agent is placed at each mobile station. Each mobile station sends its throughput to the AP. The AP sums all the throughputs and sends the aggregate throughput back to the mobile stations. Each station's agent uses this aggregate throughput as its reward when choosing its contention window size. The current Python code assumes that every mobile station operates under the same conditions (the same distance from the access point, the same antenna gain, and so on). In the future, I will use ns-3 to run more realistic simulations.
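The shared reward can be sketched as a small function. This follows Multiagent.py, where the reward is the aggregate throughput over one measurement interval divided by the PHY rate (`thr / rate / M` in the code), so it falls roughly between 0 and 1. The names `aggregate_reward()` and `broadcast_reward()` are hypothetical, and the AP-to-station signaling itself is not modeled.

```python
from typing import List, Sequence


def aggregate_reward(succ_pkts_per_sta: Sequence[int], pkt_size_bytes: int,
                     interval_s: float, rate_mbps: float) -> float:
    """AP side: sum per-station deliveries into an aggregate throughput (bit/s)
    and normalize it by the PHY rate."""
    total_bits = sum(succ_pkts_per_sta) * pkt_size_bytes * 8.0
    thr_bps = total_bits / interval_s
    return thr_bps / (rate_mbps * 1e6)


def broadcast_reward(succ_pkts_per_sta: Sequence[int], pkt_size_bytes: int,
                     interval_s: float, rate_mbps: float) -> List[float]:
    """Every station receives the same (shared) reward."""
    r = aggregate_reward(succ_pkts_per_sta, pkt_size_bytes, interval_s, rate_mbps)
    return [r] * len(succ_pkts_per_sta)


# Three stations delivered 10, 20 and 25 packets of 1000 bytes in 0.1 s at 11 Mbps
rewards = broadcast_reward([10, 20, 25], 1000, 0.1, 11)  # each is about 0.4
```

Sharing one reward pushes every agent toward the cell-wide optimum rather than its own throughput. A per-station reward is left as a commented-out alternative in Multiagent.py.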

CSMA/CA (802.11b) (no reinforcement learning)

```python
import random
import sys

import numpy as np

_n = 50          # number of nodes
_simTime = 500   # sec
rate = 11        # 11, 5.5, 2 or 1 Mbps
_cwmin = 32
_cwmax = 1024
SIFS = 10        # us
DIFS = 50        # us
EIFS = SIFS + DIFS + 192 + 112  # us
SLOT = 20        # us
M = 1000000      # microseconds per second
_pktSize = 1000  # bytes
stat_succ = 0
stat_coll = 0
stat_pkts = np.zeros(_n)  # successfully delivered packets per station
cw = np.zeros(_n)         # current contention window per station
bo = np.zeros(_n)         # remaining backoff slots per station
now = 0.0                 # simulation clock (sec)


def init_bo():
    for i in range(0, _n):
        cw[i] = _cwmin
        bo[i] = random.randint(0, _cwmax) % cw[i]
        # print("cw[", i, "]=", cw[i], " bo[", i, "]=", bo[i])


def Tdata():
    # 192 us PLCP preamble/header + (payload + 28 bytes MAC overhead) at `rate`
    global rate
    time = 192 + ((_pktSize + 28) * 8.0) / rate
    return time


def Tack():
    # 192 us PLCP preamble/header + 14-byte ACK at 1 Mbps
    time = 192 + (14 * 8.0) / 1
    return time


def getMinBoAllStationsIndex():
    # Index of the station with the smallest remaining backoff
    index = 0
    min = bo[index]
    for i in range(0, _n):
        if bo[i] < min:
            index = i
            min = bo[index]
    return index


def getCountMinBoAllStations(min):
    # Number of stations whose backoff expires in the same slot
    count = 0
    for i in range(0, _n):
        if bo[i] == min:
            count += 1
    return count


def subMinBoFromAll(min, count):
    global _cwmin, _cwmax
    for i in range(0, _n):
        if bo[i] < min:
            print("<Error> min=", min, " bo=", bo[i])
            sys.exit(1)
        if bo[i] > min:
            # Stations that did not transmit advance their countdown
            bo[i] = bo[i] - min - 1
        elif bo[i] == min:
            if count == 1:
                # Success: reset CW to CWmin and draw a new backoff
                cw[i] = _cwmin
                bo[i] = random.randint(0, _cwmax) % cw[i]
            elif count > 1:
                # Collision: double CW (binary exponential backoff, capped at CWmax)
                if cw[i] < _cwmax:
                    cw[i] *= 2
                else:
                    cw[i] = _cwmax
                bo[i] = random.randint(0, _cwmax) % cw[i]
            else:
                print("<Error> count=", count)
                sys.exit(1)


def setStats(min, index, count):
    global stat_succ, stat_coll
    if count == 1:
        stat_pkts[index] += 1
        stat_succ += 1
    else:
        stat_coll += 1
        for i in range(0, _n):
            if bo[i] < min:
                print("<Error> min=", min, " bo=", bo[i])
                sys.exit(1)
            # elif bo[i] == min:
            #     print("Collision with min=", min)


def setNow(min, count):
    # Advance the clock by the backoff plus the time the channel is busy
    global M, now, SIFS, DIFS, EIFS, SLOT
    if count == 1:
        now += min * SLOT / M
        now += Tdata() / M
        now += SIFS / M
        now += Tack() / M
        now += DIFS / M
    elif count > 1:
        now += min * SLOT / M
        now += SIFS / M
        now += Tdata() / M
        now += EIFS / M
    else:
        print("<Error> count=", count)
        sys.exit(1)


def resolve():
    # One contention round: earliest backoff wins (or collides)
    index = getMinBoAllStationsIndex()
    min = bo[index]
    count = getCountMinBoAllStations(min)
    setNow(min, count)
    setStats(min, index, count)
    subMinBoFromAll(min, count)


def printStats():
    print("\nGeneral Statistics\n")
    print("-" * 50)
    numPkts = 0
    for i in range(0, _n):
        numPkts += stat_pkts[i]
    print("Total num of packets:", numPkts)
    print("Collision rate:", stat_coll / (stat_succ + stat_coll) * 100, "%")
    print("Aggregate Throughput:", numPkts * (_pktSize * 8.0) / now)


def main():
    global now, _simTime, DIFS, M
    random.seed(1)
    init_bo()
    now += DIFS / M
    while now < _simTime:
        resolve()
    printStats()


main()
```

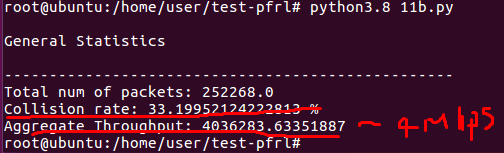

Execution

With 50 nodes (802.11b), the collision rate is about 33% and the aggregate throughput is about 4 Mbps.
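To put the ~4 Mbps figure in context, the timing terms that `setNow()` adds per contention round can be evaluated directly. The sketch below recomputes them from the same constants as the simulator. The helpers `success_cycle()`, `collision_cycle()` and `ceiling_mbps()` are illustrative only and are not part of the original code.

```python
# Timing constants copied from the 802.11b simulator above (microseconds)
SIFS, DIFS, SLOT = 10, 50, 20
EIFS = SIFS + DIFS + 192 + 112
RATE_MBPS = 11
PKT_SIZE = 1000  # bytes


def t_data(pkt_size=PKT_SIZE, rate=RATE_MBPS):
    # 192 us PLCP preamble/header + (payload + 28 bytes MAC overhead) at `rate`
    return 192 + (pkt_size + 28) * 8.0 / rate


def t_ack():
    # 192 us PLCP preamble/header + 14-byte ACK sent at 1 Mbps
    return 192 + 14 * 8.0 / 1


def success_cycle(backoff_slots):
    # Same terms setNow() adds when count == 1
    return backoff_slots * SLOT + t_data() + SIFS + t_ack() + DIFS


def collision_cycle(backoff_slots):
    # Same terms setNow() adds when count > 1
    return backoff_slots * SLOT + SIFS + t_data() + EIFS


def ceiling_mbps():
    # One packet per success cycle with zero backoff and no collisions (bit/us = Mbps)
    return PKT_SIZE * 8.0 / success_cycle(0)


print(round(success_cycle(0), 1), round(collision_cycle(0), 1), round(ceiling_mbps(), 2))
# prints: 1303.6 1313.6 6.14
```

Even with zero backoff and no collisions, each 1000-byte packet occupies about 1304 µs in this model. Throughput therefore cannot exceed about 6.1 Mbps. The gap down to ~4 Mbps is time spent in backoff slots and collisions.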

CSMA/CA (802.11a) (no reinforcement learning)

```python
import math
import random
import sys

import numpy as np

# reference:
# https://github.com/cecyliaborek/DCF-NumPy-simulation/blob/master/simulation.py
_n = 50            # number of nodes
_simTime = 500     # sec
rate = 54          # Mbps
control_rate = 24  # Mbps
_cwmin = 16
_cwmax = 1024
SIFS = 16          # us
DIFS = 34          # us
SLOT = 9           # us
M = 1000000        # microseconds per second
_pktSize = 1000    # bytes
stat_succ = 0
stat_coll = 0
stat_pkts = np.zeros(_n)
cw = np.zeros(_n)
bo = np.zeros(_n)
now = 0.0


def init_bo():
    for i in range(0, _n):
        cw[i] = _cwmin
        bo[i] = random.randint(0, _cwmax) % cw[i]
        # print("cw[", i, "]=", cw[i], " bo[", i, "]=", bo[i])


def Tdata():
    global rate, _pktSize
    # dictionary: (data rate, bits per symbol)
    bits_per_symbol = dict([(6, 48), (9, 48), (12, 96), (18, 96),
                            (24, 192), (36, 192), (48, 288), (54, 288)])
    ofdm_preamble = 16                                 # us
    ofdm_signal = 24                                   # bits
    ofdm_signal_duration = ofdm_signal / control_rate  # us
    service = 16                                       # bits
    tail = 6                                           # bits
    mac_header = 36 * 8                                # bits
    mac_tail = 4 * 8                                   # bits
    mac_frame = mac_header + _pktSize * 8 + mac_tail   # bits
    # Pad SERVICE + MAC frame + tail up to a whole number of OFDM symbols
    padding = (math.ceil((service + mac_frame + tail) / bits_per_symbol[rate])
               * bits_per_symbol[rate]) - (service + mac_frame + tail)  # bits
    data_duration = (ofdm_preamble + ofdm_signal_duration
                     + (service + mac_frame + tail + padding) / rate)  # us
    return data_duration


def Tack():
    ofdm_preamble = 16                                 # us
    ofdm_signal = 24                                   # bits
    ofdm_signal_duration = ofdm_signal / control_rate  # us
    service = 16                                       # bits
    tail = 6                                           # bits
    ack = 14 * 8                                       # ACK frame, bits
    ack_duration = (ofdm_preamble + ofdm_signal_duration
                    + (service + ack + tail) / control_rate)  # us
    return ack_duration


# EIFS = SIFS + ACK + DIFS
EIFS = SIFS + DIFS + Tack()


def getMinBoAllStationsIndex():
    # Index of the station with the smallest remaining backoff
    index = 0
    min = bo[index]
    for i in range(0, _n):
        if bo[i] < min:
            index = i
            min = bo[index]
    return index


def getCountMinBoAllStations(min):
    # Number of stations whose backoff expires in the same slot
    count = 0
    for i in range(0, _n):
        if bo[i] == min:
            count += 1
    return count


def subMinBoFromAll(min, count):
    global _cwmin, _cwmax
    for i in range(0, _n):
        if bo[i] < min:
            print("<Error> min=", min, " bo=", bo[i])
            sys.exit(1)
        if bo[i] > min:
            # Stations that did not transmit advance their countdown
            bo[i] = bo[i] - min - 1
        elif bo[i] == min:
            if count == 1:
                # Success: reset CW to CWmin and draw a new backoff
                cw[i] = _cwmin
                bo[i] = random.randint(0, _cwmax) % cw[i]
            elif count > 1:
                # Collision: double CW (binary exponential backoff, capped at CWmax)
                if cw[i] < _cwmax:
                    cw[i] *= 2
                else:
                    cw[i] = _cwmax
                bo[i] = random.randint(0, _cwmax) % cw[i]
            else:
                print("<Error> count=", count)
                sys.exit(1)


def setStats(min, index, count):
    global stat_succ, stat_coll
    if count == 1:
        stat_pkts[index] += 1
        stat_succ += 1
    else:
        stat_coll += 1
        for i in range(0, _n):
            if bo[i] < min:
                print("<Error> min=", min, " bo=", bo[i])
                sys.exit(1)
            # elif bo[i] == min:
            #     print("Collision with min=", min)


def setNow(min, count):
    # Advance the clock by the backoff plus the time the channel is busy
    global M, now, SIFS, DIFS, EIFS, SLOT
    if count == 1:
        now += min * SLOT / M
        now += Tdata() / M
        now += SIFS / M
        now += Tack() / M
        now += DIFS / M
    elif count > 1:
        now += min * SLOT / M
        now += SIFS / M
        now += Tdata() / M
        now += EIFS / M
    else:
        print("<Error> count=", count)
        sys.exit(1)


def resolve():
    # One contention round: earliest backoff wins (or collides)
    index = getMinBoAllStationsIndex()
    min = bo[index]
    count = getCountMinBoAllStations(min)
    setNow(min, count)
    setStats(min, index, count)
    subMinBoFromAll(min, count)


def printStats():
    print("\nGeneral Statistics\n")
    print("-" * 50)
    numPkts = 0
    for i in range(0, _n):
        numPkts += stat_pkts[i]
    print("Total num of packets:", numPkts)
    print("stat_coll:", stat_coll, " stat_succ:", stat_succ)
    collision_rate = stat_coll * 1.0 / (stat_succ + stat_coll) * 100
    print("Collision rate:", collision_rate, "%")
    print("Aggregate Throughput:", numPkts * (_pktSize * 8.0) / now)


def main():
    global now, _simTime, DIFS, M
    random.seed(1)
    init_bo()
    now += DIFS / M
    while now < _simTime:
        resolve()
    printStats()


main()
```

Execution

With 50 nodes (802.11a), the collision rate is about 38% and the aggregate throughput is about 19 Mbps.
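The same kind of check for the 802.11a model gives a rough ceiling. The sketch below re-evaluates the formulas of `Tdata()` and `Tack()` above. The data frame is padded to whole OFDM symbols using the `bits_per_symbol` table, while the ACK is not padded, exactly as in that code. It reflects the simulator's model rather than a full 802.11a PHY, and the helper names are illustrative.

```python
import math

CONTROL_RATE = 24    # Mbps, used for the SIGNAL field and the ACK
SIFS, DIFS = 16, 34  # us


def t_data_11a(pkt_size=1000, rate=54, bits_per_symbol=288):
    raw = 16 + (36 + pkt_size + 4) * 8 + 6  # SERVICE + MAC frame + tail, bits
    padded = math.ceil(raw / bits_per_symbol) * bits_per_symbol
    return 16 + 24 / CONTROL_RATE + padded / rate  # preamble + SIGNAL + data, us


def t_ack_11a():
    # As in Tack() above: the ACK is not padded to a symbol boundary
    return 16 + 24 / CONTROL_RATE + (16 + 14 * 8 + 6) / CONTROL_RATE


def ideal_throughput_mbps(pkt_size=1000):
    # One collision-free exchange with zero backoff
    cycle = DIFS + t_data_11a(pkt_size) + SIFS + t_ack_11a()
    return pkt_size * 8 / cycle


print(round(t_data_11a(), 2), round(t_ack_11a(), 2), round(ideal_throughput_mbps(), 2))
# prints: 171.67 22.58 32.75
```

In this model, the ~19 Mbps measured with 50 nodes is well below the ~32.8 Mbps that back-to-back, collision-free exchanges would achieve. The difference is time spent in backoff slots and collisions.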

Multiagent.py

```python
import collections
import copy
import random
import sys

import numpy as np
import paddle.fluid as fluid
import parl
from parl import layers

# DQN hyperparameters
MEMORY_SIZE = 20000
MEMORY_WARMUP_SIZE = 100
BATCH_SIZE = 10
LEARNING_RATE = 0.001
GAMMA = 0.9

pre_time = 0.0
pre_stat_succ = 0
pre_stat_coll = 0
_n = 50          # number of nodes
_simTime = 2000  # sec
rate = 11        # 11, 5.5, 2 or 1 Mbps
_cwmin = 32
_cwmax = 1024
SIFS = 10
DIFS = 50
EIFS = SIFS + DIFS + 192 + 112
SLOT = 20
M = 1000000
_pktSize = 1000  # bytes
stat_succ = 0
stat_coll = 0
sta_coll = np.zeros(_n)   # number of failed transmissions for each station
stat_pkts = np.zeros(_n)  # number of successful transmissions for each station
cw = np.zeros(_n)
bo = np.zeros(_n)
sta_cw = np.zeros(_n)     # CW chosen by each station's agent
now = 0.0


class Model(parl.Model):
    # Q-network: obs -> 128 -> 128 -> one Q-value per action
    def __init__(self, act_dim):
        hid1_size = 128
        hid2_size = 128
        self.fc1 = layers.fc(size=hid1_size, act='relu')
        self.fc2 = layers.fc(size=hid2_size, act='relu')
        self.fc3 = layers.fc(size=act_dim, act=None)

    def value(self, obs):
        h1 = self.fc1(obs)
        h2 = self.fc2(h1)
        Q = self.fc3(h2)
        return Q


class DQN(parl.Algorithm):
    def __init__(self, model, act_dim=None, gamma=None, lr=None):
        self.model = model
        self.target_model = copy.deepcopy(model)
        assert isinstance(act_dim, int)
        assert isinstance(gamma, float)
        assert isinstance(lr, float)
        self.act_dim = act_dim
        self.gamma = gamma
        self.lr = lr

    def predict(self, obs):
        return self.model.value(obs)

    def learn(self, obs, action, reward, next_obs, terminal):
        # TD target from the target network: r + (1 - done) * gamma * max Q'
        next_pred_value = self.target_model.value(next_obs)
        best_v = layers.reduce_max(next_pred_value, dim=1)
        best_v.stop_gradient = True
        terminal = layers.cast(terminal, dtype='float32')
        target = reward + (1.0 - terminal) * self.gamma * best_v
        # Q(s, a) of the action actually taken
        pred_value = self.model.value(obs)
        action_onehot = layers.one_hot(action, self.act_dim)
        action_onehot = layers.cast(action_onehot, dtype='float32')
        pred_action_value = layers.reduce_sum(
            layers.elementwise_mul(action_onehot, pred_value), dim=1)
        cost = layers.square_error_cost(pred_action_value, target)
        cost = layers.reduce_mean(cost)
        optimizer = fluid.optimizer.Adam(learning_rate=self.lr)
        optimizer.minimize(cost)
        return cost

    def sync_target(self):
        self.model.sync_weights_to(self.target_model)


class Agent(parl.Agent):
    def __init__(self, algorithm, obs_dim, act_dim, e_greed=0.1, e_greed_decrement=0):
        assert isinstance(obs_dim, int)
        assert isinstance(act_dim, int)
        self.obs_dim = obs_dim
        self.act_dim = act_dim
        super(Agent, self).__init__(algorithm)
        self.global_step = 0
        self.update_target_steps = 200
        self.e_greed = e_greed
        self.e_greed_decrement = e_greed_decrement

    def build_program(self):
        self.pred_program = fluid.Program()
        self.learn_program = fluid.Program()
        with fluid.program_guard(self.pred_program):
            obs = layers.data(name='obs', shape=[self.obs_dim], dtype='float32')
            self.value = self.alg.predict(obs)
        with fluid.program_guard(self.learn_program):
            obs = layers.data(name='obs', shape=[self.obs_dim], dtype='float32')
            action = layers.data(name='act', shape=[1], dtype='int32')
            reward = layers.data(name='reward', shape=[], dtype='float32')
            next_obs = layers.data(name='next_obs', shape=[self.obs_dim], dtype='float32')
            terminal = layers.data(name='terminal', shape=[], dtype='bool')
            self.cost = self.alg.learn(obs, action, reward, next_obs, terminal)

    def sample(self, obs):
        # Epsilon-greedy exploration with a slowly decaying epsilon (floor 0.01)
        sample = np.random.rand()
        if sample < self.e_greed:
            act = np.random.randint(self.act_dim)
        else:
            act = self.predict(obs)
        self.e_greed = max(0.01, self.e_greed - self.e_greed_decrement)
        return act

    def predict(self, obs):
        obs = np.expand_dims(obs, axis=0)
        pred_Q = self.fluid_executor.run(
            self.pred_program,
            feed={'obs': obs.astype('float32')},
            fetch_list=[self.value])[0]
        pred_Q = np.squeeze(pred_Q, axis=0)
        act = np.argmax(pred_Q)
        return act

    def learn(self, obs, act, reward, next_obs, terminal):
        if self.global_step % self.update_target_steps == 0:
            self.alg.sync_target()
        self.global_step += 1
        act = np.expand_dims(act, -1)
        feed = {
            'obs': obs.astype('float32'),
            'act': act.astype('int32'),
            'reward': reward,
            'next_obs': next_obs.astype('float32'),
            'terminal': terminal
        }
        cost = self.fluid_executor.run(
            self.learn_program, feed=feed, fetch_list=[self.cost])[0]
        return cost


class ReplayMemory(object):
    def __init__(self, max_size):
        self.buffer = collections.deque(maxlen=max_size)

    def append(self, exp):
        self.buffer.append(exp)

    def sample(self, batch_size):
        mini_batch = random.sample(self.buffer, batch_size)
        obs_batch, action_batch, reward_batch, next_obs_batch, done_batch = [], [], [], [], []
        for experience in mini_batch:
            s, a, r, s_p, done = experience
            obs_batch.append(s)
            action_batch.append(a)
            reward_batch.append(r)
            next_obs_batch.append(s_p)
            done_batch.append(done)
        return (np.array(obs_batch).astype('float32'),
                np.array(action_batch).astype('float32'),
                np.array(reward_batch).astype('float32'),
                np.array(next_obs_batch).astype('float32'),
                np.array(done_batch).astype('float32'))

    def __len__(self):
        return len(self.buffer)


def init_bo():
    global sta_cw
    for i in range(0, _n):
        sta_cw[i] = _cwmin
        cw[i] = sta_cw[i]
        bo[i] = random.randint(0, _cwmax) % cw[i]


def Trts():
    time = 192 + (20 * 8) / 1
    return time


def Tcts():
    time = 192 + (14 * 8) / 1
    return time


def Tdata():
    global rate
    time = 192 + ((_pktSize + 28) * 8.0) / rate
    return time


def Tack():
    time = 192 + (14 * 8.0) / 1
    return time


def getMinBoAllStationsIndex():
    index = 0
    min = bo[index]
    for i in range(0, _n):
        if bo[i] < min:
            index = i
            min = bo[index]
    return index


def getCountMinBoAllStations(min):
    global sta_coll
    count = 0
    for i in range(0, _n):
        if bo[i] == min:
            count += 1
    # Record a failed transmission for every station involved in a collision
    if count > 1:
        for i in range(0, _n):
            if bo[i] == min:
                sta_coll[i] += 1
    return count


def subMinBoFromAll(min, count):
    global _cwmin, _cwmax, sta_cw
    for i in range(0, _n):
        if bo[i] < min:
            print("<Error> min=", min, " bo=", bo[i])
            sys.exit(1)
        if bo[i] > min:
            bo[i] -= min
        elif bo[i] == min:
            if count == 1:
                # The contention window is decided only by the RL result
                cw[i] = sta_cw[i]
                bo[i] = random.randint(0, _cwmax) % cw[i]
            elif count > 1:
                cw[i] = sta_cw[i]
                bo[i] = random.randint(0, _cwmax) % cw[i]
            else:
                print("<Error> count=", count)
                sys.exit(1)


def setStats(min, index, count):
    global stat_succ, stat_pkts, stat_coll
    if count == 1:
        stat_pkts[index] += 1
        stat_succ += 1
    else:
        stat_coll += 1
        for i in range(0, _n):
            if bo[i] < min:
                print("<Error> min=", min, " bo=", bo[i])
                sys.exit(1)
            # elif bo[i] == min:
            #     print("Collision with min=", min)


def setNow(min, count):
    global M, now, SIFS, DIFS, EIFS, SLOT
    if count == 1:
        now += min * SLOT / M
        now += Tdata() / M
        now += SIFS / M
        now += Tack() / M
        now += DIFS / M
    elif count > 1:
        now += min * SLOT / M
        now += SIFS / M
        now += Tdata() / M
        now += EIFS / M
    else:
        print("<Error> count=", count)
        sys.exit(1)


def resolve():
    index = getMinBoAllStationsIndex()
    min = bo[index]
    count = getCountMinBoAllStations(min)
    setNow(min, count)
    setStats(min, index, count)
    subMinBoFromAll(min, count)


def printStats():
    global stat_coll, stat_succ
    print("\nGeneral Statistics\n")
    print("-" * 50)
    print("Collision rate:", stat_coll / (stat_succ + stat_coll) * 100, "%")
    print("Aggregate Throughput:", stat_succ * (_pktSize * 8.0) / now)


def main():
    global _n, now, _simTime, stat_succ, stat_coll, pre_stat_succ, pre_stat_coll, \
        sta_coll, _pktSize, pre_time, sta_cw, stat_pkts
    pre_collision_rate = 0.0
    pre_sta_succ = np.zeros(_n)
    pre_sta_coll = np.zeros(_n)
    sta_coll_rate = np.zeros(_n)
    pre_sta_coll_rate = np.zeros(_n)
    sta_thr = np.zeros(_n)
    random.seed(1)
    np.random.seed(1)
    init_bo()
    obs_dim = 2  # [collision rate this interval, collision rate previous interval]
    act_dim = 6  # CW = 2**(5 + action): 32, 64, ..., 1024
    print("obs_dim=", obs_dim, "act_dim=", act_dim)
    # One independent model / algorithm / agent / replay memory per station
    mymodel = []
    myalgorithm = []
    myagent = []
    myrpm = []
    for i in range(_n):
        mymodel.append(Model(act_dim=act_dim))
        myalgorithm.append(DQN(mymodel[i], act_dim=act_dim, gamma=GAMMA, lr=LEARNING_RATE))
        myagent.append(Agent(myalgorithm[i], obs_dim=obs_dim, act_dim=act_dim,
                             e_greed=0.1, e_greed_decrement=1e-6))
        myrpm.append(ReplayMemory(MEMORY_SIZE))
    step = 0
    show = 0
    state = [0.0, 0.0]
    sta_state = []
    sta_reward = []
    sta_obs = []
    sta_next_obs = []
    sta_action = []
    for i in range(_n):
        sta_state.append(state)
        sta_obs.append(np.array(sta_state[i]))
        sta_next_obs.append(np.array(sta_state[i]))
        sta_reward.append(0)
        sta_action.append(0)
    k = 0
    init_bo()
    now += DIFS / M
    while now < _simTime:
        # Warm-up: fill every replay memory before training starts
        while k < MEMORY_WARMUP_SIZE:
            k += 1
            for i in range(_n):
                sta_obs[i] = np.array(sta_state[i])
                sta_action[i] = myagent[i].sample(sta_obs[i])
                sta_cw[i] = pow(2, 5 + sta_action[i])
            # Run CSMA/CA for 0.1 s with the chosen contention windows
            t1 = now
            while True:
                resolve()
                if now - t1 > 0.1:
                    break
            for i in range(0, _n):
                if stat_pkts[i] + sta_coll[i] - pre_sta_succ[i] - pre_sta_coll[i] == 0:
                    sta_coll_rate[i] = 0.0
                else:
                    sta_coll_rate[i] = (sta_coll[i] - pre_sta_coll[i]) / (
                        stat_pkts[i] + sta_coll[i] - pre_sta_succ[i] - pre_sta_coll[i]) * 100
                # Shared reward: aggregate throughput normalized by the PHY rate
                thr = (stat_succ - pre_stat_succ) * (_pktSize * 8.0) / (now - pre_time)
                reward = thr / rate / M
                sta_reward[i] = reward
                # sta_thr[i] = (stat_pkts[i] - pre_sta_succ[i]) * (_pktSize * 8.0) / (now - pre_time)
                # sta_reward[i] = sta_thr[i] / rate / M
                next_state = []
                next_state.append(sta_coll_rate[i])
                next_state.append(pre_sta_coll_rate[i])
                pre_sta_succ[i] = stat_pkts[i]
                pre_sta_coll[i] = sta_coll[i]
                pre_sta_coll_rate[i] = sta_coll_rate[i]
                sta_next_obs[i] = np.array(next_state)
                done = False
                myrpm[i].append((sta_obs[i], sta_action[i], sta_reward[i], sta_next_obs[i], done))
                sta_state[i] = next_state
            # Update the aggregate counter once per interval so all stations share the reward
            pre_stat_succ = stat_succ
            pre_time = now
        # Train every agent once every 5 steps
        if step % 5 == 0:
            for i in range(0, _n):
                (batch_obs, batch_action, batch_reward, batch_next_obs,
                 batch_done) = myrpm[i].sample(BATCH_SIZE)
                train_loss = myagent[i].learn(batch_obs, batch_action, batch_reward,
                                              batch_next_obs, batch_done)
        for i in range(_n):
            sta_obs[i] = np.array(sta_state[i])
            sta_action[i] = myagent[i].sample(sta_obs[i])
            sta_cw[i] = pow(2, 5 + sta_action[i])
        t1 = now
        while True:
            resolve()
            if now - t1 > 0.1:
                break
        for i in range(0, _n):
            if stat_pkts[i] + sta_coll[i] - pre_sta_succ[i] - pre_sta_coll[i] == 0:
                sta_coll_rate[i] = 0.0
            else:
                sta_coll_rate[i] = (sta_coll[i] - pre_sta_coll[i]) / (
                    stat_pkts[i] + sta_coll[i] - pre_sta_succ[i] - pre_sta_coll[i]) * 100
            thr = (stat_succ - pre_stat_succ) * (_pktSize * 8.0) / (now - pre_time)
            reward = thr / rate / M
            sta_reward[i] = reward
            next_state = []
            next_state.append(sta_coll_rate[i])
            next_state.append(pre_sta_coll_rate[i])
            pre_sta_succ[i] = stat_pkts[i]
            pre_sta_coll[i] = sta_coll[i]
            pre_sta_coll_rate[i] = sta_coll_rate[i]
            sta_next_obs[i] = np.array(next_state)
            done = False
            myrpm[i].append((sta_obs[i], sta_action[i], sta_reward[i], sta_next_obs[i], done))
            sta_state[i] = next_state
        pre_stat_succ = stat_succ
        pre_time = now
        step += 1
    printStats()

    # Evaluation: reset all counters and run 5 s with greedy (predict) actions
    now = pre_time = 0.0
    state = [0.0, 0.0]
    sta_state = []
    sta_reward = []
    sta_obs = []
    sta_next_obs = []
    sta_action = []
    pre_collision_rate = 0.0
    pre_sta_succ = np.zeros(_n)
    pre_sta_coll = np.zeros(_n)
    sta_coll_rate = np.zeros(_n)
    pre_sta_coll_rate = np.zeros(_n)
    sta_thr = np.zeros(_n)
    sta_cw = np.zeros(_n)
    stat_succ = 0
    stat_coll = 0
    pre_stat_succ = 0
    sta_coll = np.zeros(_n)
    stat_pkts = np.zeros(_n)
    for i in range(_n):
        sta_state.append(state)
        sta_obs.append(np.array(sta_state[i]))
        sta_next_obs.append(np.array(sta_state[i]))
        sta_reward.append(0)
        sta_action.append(0)
    now += DIFS / M
    while now < 5:
        print("Evaluation, time=", now)
        for i in range(_n):
            sta_obs[i] = np.array(sta_state[i])
            sta_action[i] = myagent[i].predict(sta_obs[i])
            sta_cw[i] = pow(2, 5 + sta_action[i])
        t1 = now
        while True:
            resolve()
            if now - t1 > 0.1:
                break
        for i in range(0, _n):
            if stat_pkts[i] + sta_coll[i] - pre_sta_succ[i] - pre_sta_coll[i] == 0:
                sta_coll_rate[i] = 0.0
            else:
                sta_coll_rate[i] = (sta_coll[i] - pre_sta_coll[i]) / (
                    stat_pkts[i] + sta_coll[i] - pre_sta_succ[i] - pre_sta_coll[i]) * 100
            thr = (stat_succ - pre_stat_succ) * (_pktSize * 8.0) / (now - pre_time)
            reward = thr / rate / M
            sta_reward[i] = reward
            next_state = []
            next_state.append(sta_coll_rate[i])
            next_state.append(pre_sta_coll_rate[i])
            pre_sta_succ[i] = stat_pkts[i]
            pre_sta_coll[i] = sta_coll[i]
            pre_sta_coll_rate[i] = sta_coll_rate[i]
            sta_next_obs[i] = np.array(next_state)
            done = False
            myrpm[i].append((sta_obs[i], sta_action[i], sta_reward[i], sta_next_obs[i], done))
            sta_state[i] = next_state
        pre_stat_succ = stat_succ
        pre_time = now
        step += 1
    printStats()


main()
```

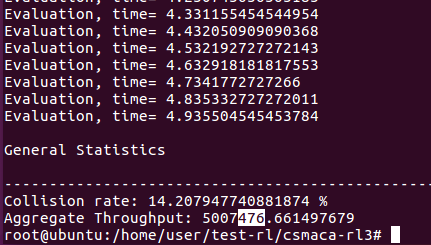

Execution

With the multi-agent version, the aggregate throughput reaches about 5 Mbps. This compares with about 4 Mbps for standard 802.11b CSMA/CA with 50 nodes.
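`DQN.learn()` in Multiagent.py builds the usual DQN loss with PARL/fluid layers. It computes target = r + (1 - terminal) * γ * max over a' of Q_target(s', a'), then averages the squared error against Q(s, a) for the action taken. The sketch below restates just that computation in plain NumPy for readability. It is an illustration only: it is not the PARL API and performs no gradient step. `dqn_targets()` and `dqn_loss()` are hypothetical names.

```python
import numpy as np


def dqn_targets(reward, next_q, done, gamma=0.9):
    """TD targets: r + (1 - done) * gamma * max_a' Q_target(s', a')."""
    best_next = next_q.max(axis=1)
    return reward + (1.0 - done) * gamma * best_next


def dqn_loss(q, action, target):
    """Mean squared error between Q(s, a) for the taken action and the target.
    Actions arrive as float32 from ReplayMemory.sample(), so cast to int."""
    q_taken = q[np.arange(len(action)), action.astype(int)]
    return np.mean((q_taken - target) ** 2)


# Batch of two transitions; the second one is terminal
reward = np.array([0.3, 0.4])
next_q = np.array([[1.0, 2.0], [0.5, 0.1]])
done = np.array([0.0, 1.0])
target = dqn_targets(reward, next_q, done)  # approximately [2.1, 0.4]
q = np.array([[2.0, 1.0], [0.0, 0.4]])
loss = dqn_loss(q, np.array([0.0, 1.0], dtype=np.float32), target)  # approximately 0.005
```

In Multiagent.py `done` is always `False`, so every target includes the bootstrapped term. The target network is synchronized every 200 learning steps (`update_target_steps`).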


Dr. Chih-Heng Ke (柯志亨)

Department of Computer Science and Information Engineering, National Quemoy University, Kinmen, Taiwan

Email: smallko@gmail.com